We recently spoke with Michael Iantosca, who has led Avalara to achieve an unprecedented enterprise-wide technical content transformation in record time as the senior director of the Knowledge Platform and Engineering team. In this profile, we highlight key strategies he and his team have embraced in driving this transformation.

Michael Iantosca has more than 42 years of experience in content management, including 38 years at IBM, where he began as a hybrid information developer and systems programming engineer. His tenure there was marked by numerous successes, such as establishing the first multimedia labs and spearheading the development of IBM's structured technical publication systems. Notably, he played a pivotal role in forming the team that created the highly successful DITA XML document standard.

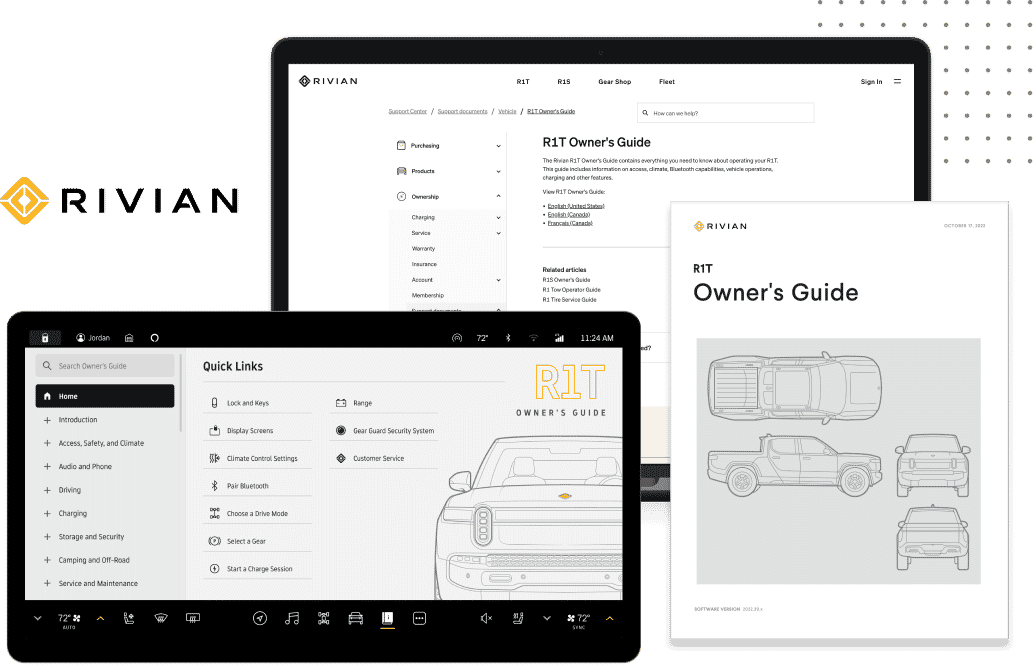

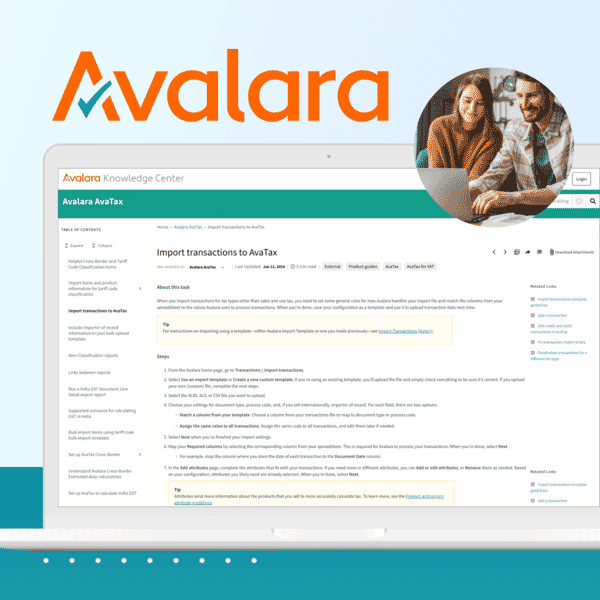

By late 2020, Michael had retired from IBM, but a compelling opportunity led him to join Avalara, a leading provider of cloud-based tax compliance solutions. The company emerged in 2004 offering automated sales tax calculations (AvaTax) and streamlined tax returns (Returns), and has since created an expansive portfolio of tax-related products and services. Now, Avalara needed a proven expert to construct what Michael describes as one of the world's most efficient and advanced content supply chains. Avalara leadership recognized that transforming the content supply chain was an essential step toward becoming a multi-billion-dollar enterprise, because the prior platform could not scale or unify content from across the enterprise.

The ambitious content supply chain endeavor aimed to establish an electronic publishing ecosystem that would unify the creation, delivery, and management of technical content across the enterprise within 18 months. Under Michael’s helm as Avalara’s senior director of technical content and knowledge platforms, the team has delivered on this objective by:

- Restructuring all technical product content into a componentized and structured format using DITA XML, the industry-standard document format that Michael helped shape.

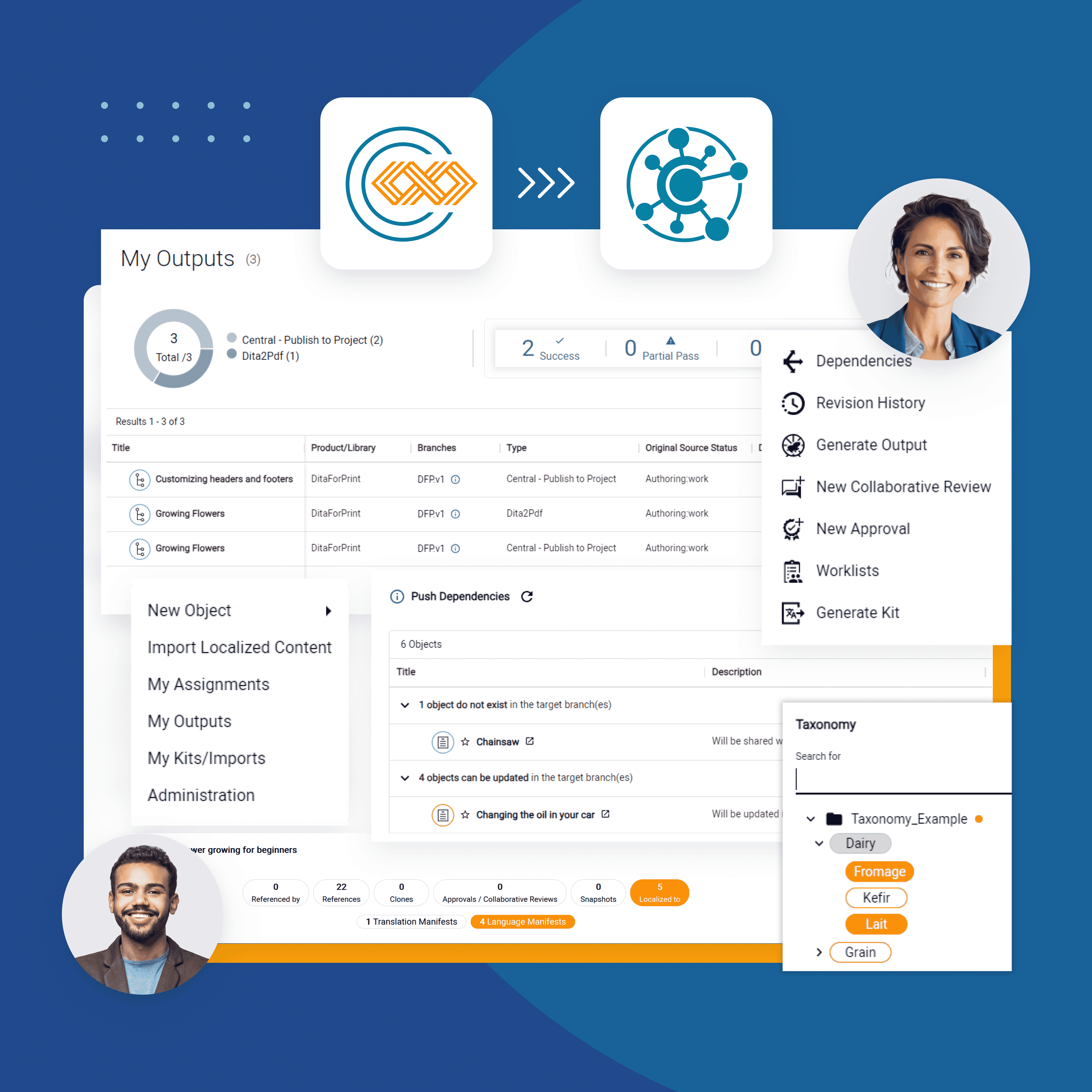

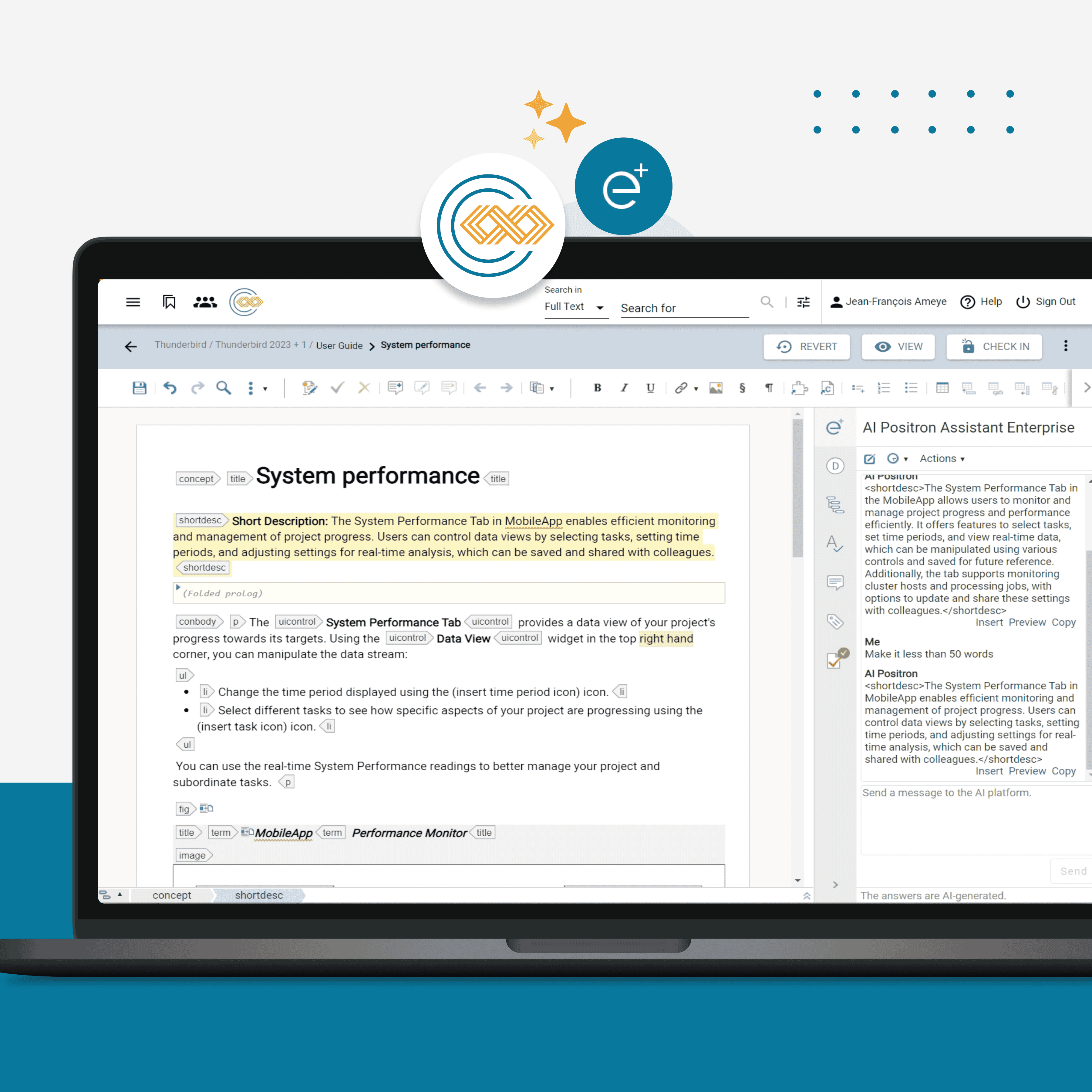

- Deploying the MadCap IXIA CCMS enterprise component content management system to facilitate content creation for numerous contributors across multiple continents.

- Replacing multiple content silos throughout the enterprise with a customized omnisource/omnichannel content delivery platform (CDP) mesh based on ZoomIn, dubbed "The Content Lake" by Michael, along with a new knowledge center portal and in-product user assistance.

- Developing unified enterprise taxonomies and ontologies for content and various business units utilizing Semantic Web Company's PoolParty semantic suite.

- Introducing an AI-driven content governance program and technology, powered by Acrolinx, to ensure consistency in style, editorial quality, terminology, and search optimization across all content.

- Spearheading the company's inaugural enterprise-wide content globalization initiative, establishing an automated localization supply chain for content and applications based on the XTM translation management system (TMS).

Executing on the Vision of Content-as-a-Service

Avalara’s content supply chain implements Michael’s vision of Content-as-a-Service, where content flows like electricity, and the content supply chain is the grid. All systems and services are fully integrated with connectors and workflow management. Despite its comprehensive nature, the solution was implemented within 18 months at a time when smaller initiatives have typically taken an average enterprise several years to complete.

“Throughout my career at Big Blue, I implemented multiple generations of these systems, building some of them from scratch,” Michael said. “With a team of seasoned content professionals and engineers, coupled with the support of senior management, the path ahead was clear. I never once doubted that we could pull it off.”

However, building a state-of-the-art unified technical content ecosystem, one that most of the world’s best-known companies still lack, wasn’t Michael’s real end game.

“My real goal has been to lead the content industry into the world of generative AI, hyper-personalization, and busting the “other” half of the content silo conundrum – unified enterprise content operations,” Michael explains.

Before delving into the innovative projects, though, the team had to complete the core transformation work.

“It’s the elephant in the room that no one in the content industry wants to talk about,” says Michael. “Companies have been so mired in busting content creation and delivery silos that they’ve forgotten or have ignored content operation silos. Just the thought of tackling fragmented operations is daunting.”

Michael highlights a common issue within organizations: the compartmentalization of content operations across various functional teams, such as product, support, learning, developer, partner, and pre-sales. This fragmentation affects not only the creation and delivery of content but also its planning and management processes.

For example, Michael notes, ask anyone in virtually any company if they can produce a comprehensive inventory of all customer-facing technical content related to any product or service from across the enterprise, and you’ll almost universally receive a puzzled expression or a cynical chuckle in response. Meanwhile, customers are indifferent to the internal divisions responsible for content production. They want the right content, at the right time, delivered to the right person, and in the right experience, irrespective of which part of the company created it.

The disconnect points to the need for an integrated approach to content operations: a central system of record that amalgamates content plans, processes, systems, and services. Such a system would ensure cohesive management of what Michael terms “the Total Content Experience” (TCE), bridging the gap between disparate team efforts and the unified content experience customers demand.

“Envision a seamlessly automated, workflow-driven ecosystem for content operations, akin to a mission control center but for enterprise content,” Michael explains. “This is the concept of ‘Content Central’.”

My real goal has been to lead the content industry into the world of generative AI, hyper-personalization, and busting the “other” half of the content silo conundrum – unified enterprise content operations.

Michael Iantosca | Senior Director of Content Platforms and Knowledge Engineering, Avalara

Creating Content Central

The vision of "Content Central" is to have a platform where each functional content creation team can plan and monitor their content activities within a unified system of record. This digital hub orchestrates content operations from inception to publication, incorporating centralized services, streamlined workflows, communication and alerts, role-based access control, and comprehensive permission management. Moreover, Content Central is designed to seamlessly integrate with and enhance the efficiency of the content creation and distribution pipeline.

However, Michael notes a significant hurdle in realizing the Content Central vision: "A system of this caliber doesn't yet exist in the commercial market. We've taken on the challenge of developing it ourselves."

Michael acknowledges that his team can build upon the significant advancements from the expanding market of content technology and system providers. These offerings span authoring, content management, delivery, localization, and semantic systems. While customization remains necessary, these sophisticated systems come equipped with connectors and APIs that simplify integration and enhance functionality.

By spearheading the development of Content Central, Michael says, "My goal is to highlight the market potential for such a solution, convincing suppliers of its viability and the genuine need within the content community."

At the same time, Michael cautions, “It's time for suppliers to rise to the occasion. Otherwise, the community might need to band together and build an open-source solution that's free from proprietary constraints.”

Leveraging Generative AI and Hyper Personalization

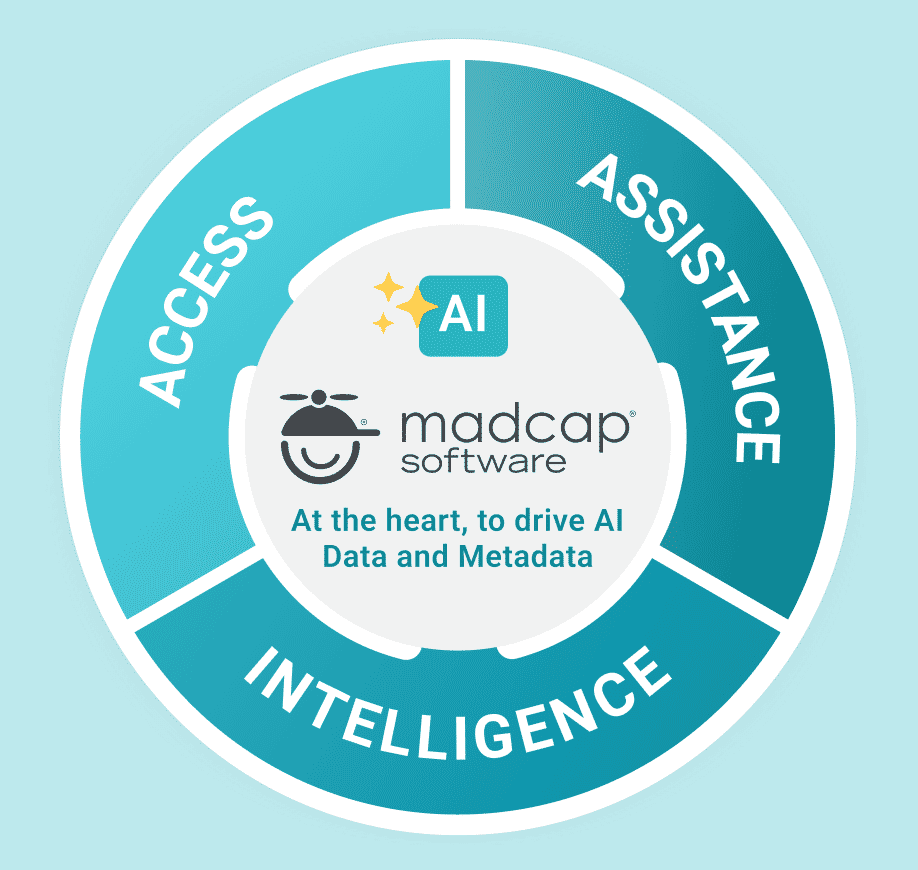

Another element of Michael’s endgame is retrieval-augmented generation (RAG) and hyper-personalization, which he has been writing and talking about for several years at multiple conferences and in papers and webinars, long before ChatGPT came into the public limelight.

Michael began working with AI in the early 1990s, when the field focused on expert systems. It then branched into computational linguistics-based AI, followed by IBM Watson, which was then in its infancy. He became intrigued with knowledge graphs in 2015 after being inspired by his late, esteemed IBM colleague and friend, James Matheson, an accomplished author renowned for his seminal works on SEO.

"James introduced me to knowledge graphs in 2015 only three years after Google introduced them, and it was a revelation," Michael recalls. "The potential of knowledge graphs as the future of AI-driven content struck me with profound clarity."

Despite his enthusiasm and multiple attempts to persuade his previous peers of this vision, Michael's knowledge graph proposals were met with resistance. Undeterred, Michael found in Avalara a genuine commitment to valuing content and willingness to invest resources to elevate content strategy to its rightful place.

“Avalara became my orange oasis," Michael says. “Now, it seems everyone is discussing knowledge-graph-driven RAG. Welcome to my world!”

A Semantic-Powered Future with Knowledge Graphs

The Avalara content supply chain, now reaching maturity, is the foundation of what Michael calls Avalara’s enterprise Knowledge Base, which unifies content across the enterprise. It serves as the single source powering in-product user assistance for all Avalara products, the Avalara Global Support portal, the Avalara Knowledge Center, content for developers and partners, and now, Avalara’s generative AI help and support chatbot solutions.

“This is when the fun begins,” Michael says. He has been many steps ahead working on next-generation models to make ChatGPT reliable, accurate, and explainable.

“Explainable AI (XAI) is the goal,” Michael insists. “While public generative AI solutions are terrific, they are not trustworthy enough for companies that require precision and cannot afford them to hallucinate and be wrong when it comes to sensitive services for which companies or their customers can be held legally or financially liable.”

Most companies have large private collections of curated content on which generative AI solutions, such as chatbots, can be trained, but Michael says that this is not enough. They need to be augmented with external, curated knowledge bases that can fact-check and provide the sources from which answers are generated.

“Large language models cannot do it on their own as they are based on generating the next most likely word based on patterns captured using linear algebra,” he explains.

Moreover, Michael states, “Generative AI is amazing at creating novel content. It can regurgitate recorded content in new and innovative forms. What it cannot do is create novel knowledge. It cannot take what only exists in the human brain and use it without someone first extracting it and training the machine.”

Therefore, Michael says, it is up to content professionals to capture knowledge and put it into a knowledge graph. The graph can then be mined in conjunction with the generative AI results to perform fact-checking, support inference and reasoning, ensure referential integrity, and provide the degree of explainability and confidence that users expect.
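As a rough illustration of the fact-checking idea, the sketch below compares a claim extracted from a generated answer against a small set of curated facts and returns the sources behind the verdict. It is a minimal sketch under stated assumptions, not a description of Avalara's implementation: the `Fact` type, the `GRAPH` contents, the `check_claim` function, and the source identifiers are all hypothetical, and a production system would use a real graph store rather than an in-memory list.

```python
from typing import NamedTuple


class Fact(NamedTuple):
    subject: str
    predicate: str
    obj: str
    source: str  # identifier of the curated topic the fact came from


# Hypothetical curated facts; names and sources are illustrative only.
GRAPH = [
    Fact("product-a", "supports", "feature-x", "kb/topic-101"),
    Fact("feature-x", "requires", "license-pro", "kb/topic-205"),
]


def check_claim(subject: str, predicate: str, obj: str) -> dict:
    """Compare one generated claim against the curated graph.

    Returns a verdict plus the sources consulted, so the answer
    can be explained rather than simply trusted.
    """
    known = [f for f in GRAPH if f.subject == subject and f.predicate == predicate]
    for fact in known:
        if fact.obj == obj:
            return {"verdict": "supported", "sources": [fact.source]}
    if known:
        # The graph knows this subject and predicate, but not this value.
        return {"verdict": "not_supported", "sources": [f.source for f in known]}
    return {"verdict": "unknown", "sources": []}


supported = check_claim("product-a", "supports", "feature-x")
not_supported = check_claim("feature-x", "requires", "license-basic")
unknown = check_claim("product-b", "supports", "feature-x")
```

The three-way verdict is a deliberate choice in this sketch: separating "not supported" from "unknown" lets a caller treat a missing fact as a gap in the graph rather than as an error in the generated answer.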

“Once you get into the nuts-and-bolts of it, generative content is far more the domain of content and knowledge management than programming, and content professionals need to lead that partnership with engineering,” Michael observes.

Practical Applications of Knowledge Graphs

But why use knowledge graphs? Businesses today grapple with vast amounts of data, often overwhelming traditional databases ill-equipped to manage complex relationships. Knowledge graphs, paired with knowledge bases, excel in representing intricate multi-dimensional relationships, offering a solution. They organize, integrate, and leverage data effectively, enabling enhanced integration, discovery, and contextual understanding.

Companies stand to benefit from the agile and scalable data architecture, improved governance, enriched customer experiences, and accelerated innovation enabled by knowledge graph databases. Implementing this technology unlocks insights, drives better decisions, and positions companies at the forefront of content and data-driven excellence.

With that in mind, Michael’s team set out to prove that it is not only possible but practical to take large volumes of content, such as an entire corpus of a company’s technical documentation, and automatically create and maintain a scalable knowledge graph that can then be used to augment a generative AI solution. It worked. Now, by taking structured content, or by wrapping unstructured content in structured wrappers, Michael and his team can generate and maintain scalable knowledge graphs with no manual intervention. Moreover, the graph’s capabilities become even more powerful when additional metadata is applied in the form of taxonomy labels using a semantic management hub.
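To make the idea of deriving a graph from structured content more concrete, here is a minimal sketch that turns one DITA-like topic into subject-predicate-object triples, including taxonomy labels from its metadata. Everything specific in it is an assumption for illustration: the sample topic, its id, the predicate names such as `hasTaxonomyLabel`, and the `topic_to_triples` function are hypothetical and do not reflect the team's actual pipeline.

```python
import xml.etree.ElementTree as ET

# Hypothetical DITA-like task topic; ids, labels, and links are illustrative.
TOPIC_XML = """
<task id="configure-settings">
  <title>Configure settings</title>
  <prolog>
    <metadata>
      <category>Setup</category>
      <prodname>AvaTax</prodname>
    </metadata>
  </prolog>
  <taskbody>
    <prereq><xref href="create-company.dita"/></prereq>
  </taskbody>
</task>
"""


def topic_to_triples(xml_text: str) -> list:
    """Turn one structured topic into (subject, predicate, object) triples."""
    root = ET.fromstring(xml_text.strip())
    topic_id = root.get("id")
    # The element name itself (task, concept, reference) is semantic information.
    triples = [(topic_id, "hasType", root.tag)]
    title = root.findtext("title")
    if title:
        triples.append((topic_id, "hasTitle", title.strip()))
    # Taxonomy labels applied as metadata become typed edges in the graph.
    for category in root.iter("category"):
        triples.append((topic_id, "hasTaxonomyLabel", category.text.strip()))
    for product in root.iter("prodname"):
        triples.append((topic_id, "describesProduct", product.text.strip()))
    # Cross-references become links between topics.
    for xref in root.iter("xref"):
        triples.append((topic_id, "linksTo", xref.get("href")))
    return triples


triples = topic_to_triples(TOPIC_XML)
for triple in triples:
    print(triple)
```

Because the structure of the topic already carries meaning, no manual annotation step is needed in this sketch; rerunning the function whenever a topic changes would keep its triples current.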

Michael has found that a knowledge graph is also an effective tool for enhancing large language model (LLM) responses because the meaning of the data in the graph is encoded in the data structure itself. Unlike LLMs, which use statistical models to predict sequences of words, knowledge graphs can “understand” these word sequences due to semantic metadata. So, when a user inputs a prompt or search argument as they normally would with an LLM, the input is instead routed to the domain-curated knowledge graph to produce a more accurate and relevant response.
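The routing step described above might look something like the following sketch, which matches entities in a user prompt against a curated graph and assembles a grounded prompt that carries the matching facts and their sources. It assumes simple substring matching for entity detection, which a real system would replace with proper entity linking. The `FACTS` list, the function names, and the source identifiers are hypothetical, and the call to the language model itself is left out.

```python
# Hypothetical curated facts: (entity, relation, value, source).
FACTS = [
    ("export job", "is scheduled in", "the admin console", "kb/topic-310"),
    ("export job", "requires", "the admin role", "kb/topic-311"),
    ("audit report", "is generated from", "the reports page", "kb/topic-420"),
]


def retrieve_facts(prompt: str) -> list:
    """Return the facts whose entity is mentioned in the prompt."""
    text = prompt.lower()
    return [fact for fact in FACTS if fact[0] in text]


def build_grounded_prompt(prompt: str) -> str:
    """Attach curated facts and their sources to the user's question."""
    facts = retrieve_facts(prompt)
    if not facts:
        # Nothing in the graph matches, so pass the prompt through unchanged.
        return prompt
    lines = [f"- {s} {p} {o} [source: {src}]" for s, p, o, src in facts]
    return (
        "Answer using only these facts and cite their sources:\n"
        + "\n".join(lines)
        + f"\n\nQuestion: {prompt}"
    )


grounded = build_grounded_prompt("How do I set up an export job?")
print(grounded)
```

Keeping the source identifier next to each fact is what would let a downstream answer point back to the content it was built from.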

The following model shows one example of how a knowledge graph can augment a generative AI system and LLM for content search and retrieval. It combines several methods and processes for a repeatable and maintainable solution.

Looking Ahead

Michael says that when it comes to the application of generative AI and knowledge graphs for user assistance, we haven’t seen anything yet. But it is not about bigger language models with billions more parameters; it’s how we apply them. He also believes that the value will no longer be in the content; value will be in the knowledge. And he predicts that we will soon see an avalanche of what he calls Do-with and Do-for generative agents that transform the user experience by combining content with controlled knowledge.

“For decades we’ve been dreaming of the day when we can move from prescriptive content to truly dynamic personalized content, on the fly, based on what the user is trying to do at that very moment. However, what content professionals have been calling personalized content hasn’t been truly personalized; it’s been personified,” Michael observes. “Now, true, individually personalized dynamic content is finally within reach.”