This article presents a conversation between two technical writing business owners about how they’ve promoted and maintained collaborative document review processes among their respective clients. Whenever practicable, they’ve shifted to the cloud to create and maintain review processes that work for all members of a review team, resulting in better outputs and improved results for end users.

What kinds of product publications do you produce?

Ann: Over the years, I’ve worked with clients in various industries and departments, such as engineering, software development, manufacturing, and marketing. Consistently, I find that clients want PDF file output, even if the primary output I’m producing for them is HTML5 web help.

Nita: Same! I’ve touched a broad swath of subject matter: manufacturing, including automotive; medical devices and bioscience; legal; software and tech; utilities; transportation; and professional standards and accreditation. My clients mainly distribute PDFs (both marketing and technical documentation) and HTML5 help systems (with one .CHM holdout). One client also publishes training courses.

Do you conduct collaborative document reviews?

Ann: Yes, whenever a client requests a review. As much as I try to promote a truly collaborative document review process, sometimes I opt to use the client’s preferred review process, even if I know that process will make it harder for me as the writer.

Nita: Likewise. At least, I’m working hard to promote collaborative reviews. I’d rather have all reviewers look at the same content and see one another’s feedback than process separate, sometimes conflicting, sets of feedback.

What tools do you use?

Ann: I’ve found these collaboration tools to be helpful:

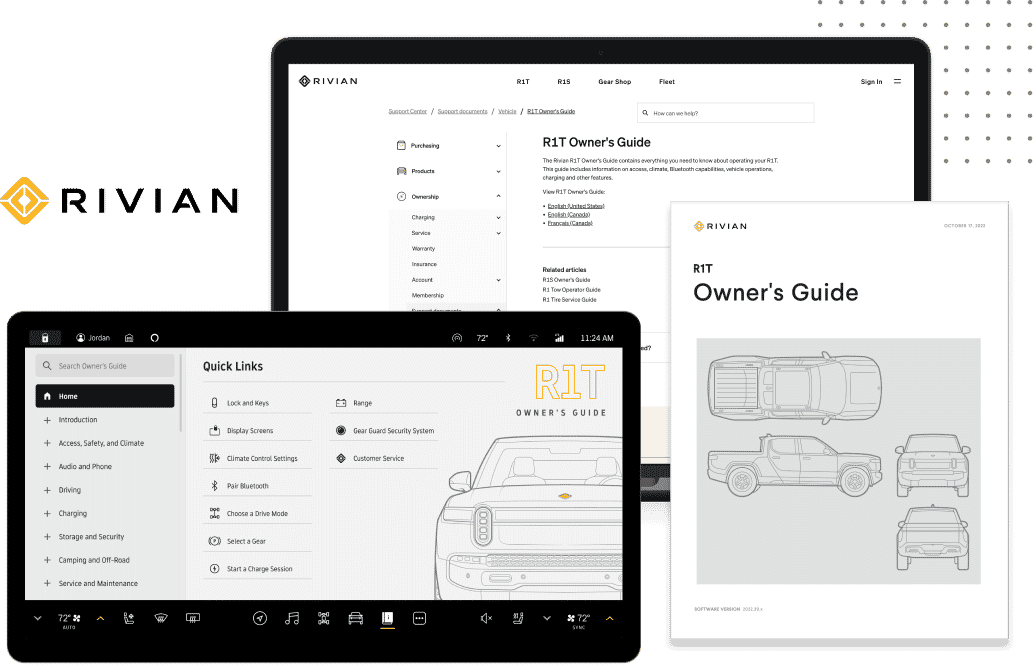

- MadCap Central – I can send content to multiple reviewers right from within Flare and then process the changes back in Flare. Each reviewer logs in to Central, locates the topics to review, and reviews them using a lightweight editing tool to add or delete content or to insert comments. All suggested changes are saved automatically, and every reviewer can see the edits others have made in real time. When all reviews are submitted, I process the feedback right within Flare.

- Emailed Word files or PDFs – Word files are attractive to corporate reviewers because they’re familiar with Word’s Track Changes feature. PDFs are useful because they show the document’s final formatting. I’ll typically send both the PDF file and the Word file and ask reviewers to make their comments via Track Changes in the Word file. This process is straightforward when there’s only one reviewer, but it degenerates into chaos when there are multiple reviewers. Reviewers email marked-up documents back and forth, and reaching consensus is slow and frustrating. Then I have the headache of deciphering and resolving all of the conflicting review comments.

- HTML files posted on an internal file server, with a link to the files emailed to the reviewer – For content posted this way, the reviewer typically returns feedback in either a Word document or just an email.

- Google Docs – This is a great approach when a reviewer wants to proactively suggest changes to existing content or contribute a large amount of new content.

Nita: Sounds like we share the viewpoint that our job is to adapt to reviewers’ preferences while simultaneously leading them toward best practices. I’ve used all the same techniques you list, particularly MadCap Central for topic-by-topic reviews.

I also deliver PDFs and Word docs for review, but not via email, because emailed files leave reviewers working in isolation. Instead, I put the files in the client’s preferred cloud-based storage so that all reviewers can mark up the same files. We’ve used:

- Box

- Dropbox

- OneDrive

- Google Drive

- Microsoft Teams (for which each review draft has its own channel, with files attached)

- Huddle

Sometimes, I’ve used Slack to distribute a shared document for review. Each review draft has its own thread, with the files attached. The problem here is that reviewers tend to download, edit, and upload their feedback, thwarting my collaborative review goals. (Hmmm, now that I think of it, I should integrate MadCap Central + Slack. 😉)

When all else fails, I hold a 30- to 60-minute virtual review meeting, typically with no more than three reviewers. I share my screen and work through the Flare project, in whose topics I’ve placed my questions. Before the meeting, I send out a Flare-generated PDF with these “SME questions” displayed so that reviewers can also see the formatting. Unfortunately, not all reviewers will have done their “homework.”

What were your original goals for collaborative document reviews?

Ann: Having a collaborative review process keeps everyone on the same page, literally and figuratively. Most review content is not contentious, but there’s always that small fraction of content that generates a lot of discussion. When multiple people need to weigh in on some content, it’s essential to have a collaborative team process in place that enables all parties to contribute easily.

Another important goal is ease of integrating changes into Flare. When review input is returned in marked-up PDFs or Word documents or in email, it’s time-consuming to process and integrate all SME feedback.

I think MadCap Central is one of the best tools for collaborative reviews, for two reasons:

- All feedback is available to all reviewers, so everyone can see all contributions.

- Processing the review input is easy because Central is so tightly integrated with Flare.

Nita: My goals are similar, Ann. They boil down to more efficiency and better outcomes.

First, I’ve wanted to:

- Reduce the number of returned reviews to be sifted through

- Avoid putting writers in the position of arbitrating contradictory feedback and requests

Second, I’ve used collaborative reviews to put persistently non-responsive, recalcitrant, or downright bullying reviewers on notice. When everyone can see that someone has either not responded or has responded in a rude or aggressive way, the collective weight of the review team can be brought to bear to encourage cooperation.

Third, for those reviewers who adapt well to topic-based reviews, I’ve wanted to eliminate the need to copy and paste or manually enter changes into Flare from another collaboration tool. MadCap Central is ideal in this regard!

Has moving to collaborative document reviews achieved the goals?

Nita: Yes! And on all counts, too!

Ann: Yes. Having a tool such as MadCap Central really makes a difference. It saves time for both the reviewer and the Flare author, and it helps ensure that input is processed accurately (rather than through the error-prone copying and pasting or retyping that other review processes require).

How do you train your reviewers?

Nita: Seems like everyone knows how to mark up a PDF, track changes in Word, and/or make suggestions in Google Docs, so little training is needed. That’s a major driver of my “going with the flow” with those review formats.

With MadCap Central, I’ve had several successes and one failure (which I’ll talk about later). Central has been introduced successfully with client teams that are technically savvy. Because they work for web-based businesses, they’re used to operating in the cloud. And given that they’re now mainly remote-first, they’re used to collaboration tools. Furthermore, they’re used to content being delivered as standalone web pages or articles. I’ve only had to demonstrate Central’s Review features, and the reviewers have dived right in.

Returning to troublesome reviewers: I discuss expectations during reviewer training. I explain that, although we professional writers have thick skins and know how to handle critical but constructive feedback, we will not tolerate rudeness or abuse.

Ann: For new MadCap Central reviewers, I conduct a short demonstration. Thirty minutes to an hour is sufficient for this training meeting. For reviews conducted via email, I put all the review details in the email (due date, how to return review comments, how to mark up the files).

Nita: Good point about setting due dates. During reviewer training, I emphasize that feedback will be expected within 2-3 staff days, regardless of the format or delivery mechanism.

Any unexpected challenges?

Nita: My MadCap Central failure happened years ago, when Central’s Review features were first introduced. I understood its potential and was really eager to try it. But I misjudged my client, a utility company where a team of a dozen business writers and analysts was contributing to a very long biennial report. These writers were accustomed to writing and reviewing in Microsoft Word, and despite my writing and illustrating a whole guide for them (in Flare!) about using Central for reviews, they simply struggled. We reverted to Microsoft Word, and I learned a hard lesson. Because I was so acclimated to topic-based authoring, I naïvely assumed that non-technical writers could adapt to topic-based reviewing.

Even with the Central successes, my subcontractors and I have had a few challenges. We’ve learned to send out small batches of files at a time so that we don’t overwhelm reviewers. And because we must avoid touching topics in Flare that we’ve sent out for Central review, we don’t want to hamstring ourselves by having too many topics in the out-for-review state at once. Sometimes we’ve created file conflicts when we’ve made global edits (such as a find-and-replace operation) and inadvertently touched topics that were still out for Central review. Fortunately, the conflicts were easy to resolve.

Ann: The challenges of conducting “old style” reviews are those we’ve all experienced for many years (such as having to copy/paste or retype review input). Using a tool designed for collaborative reviews, such as MadCap Central, has really alleviated most of those challenges faced by technical writers.

One limitation of the Central workflow is that the review process must be initiated in Flare. If a reviewer wants to proactively provide input, they must first ask the Flare author to send the appropriate topics for review. I’ve solved this problem by asking my reviewers and collaborators to provide unsolicited input via a Google Doc instead.

Any unexpected rewards?

Ann: I was pleasantly surprised by how easy it is to use MadCap Central. It really does integrate well with Flare and solves so many of the review process problems that we as technical authors have struggled with for years.

Nita: With old-style reviews, reviewers tended to think that reviews were a bothersome chore. I’ve found that collaborative reviews have promoted a sense of joint ownership of the content. Everyone feels invested in the project document, resulting in improved document quality.